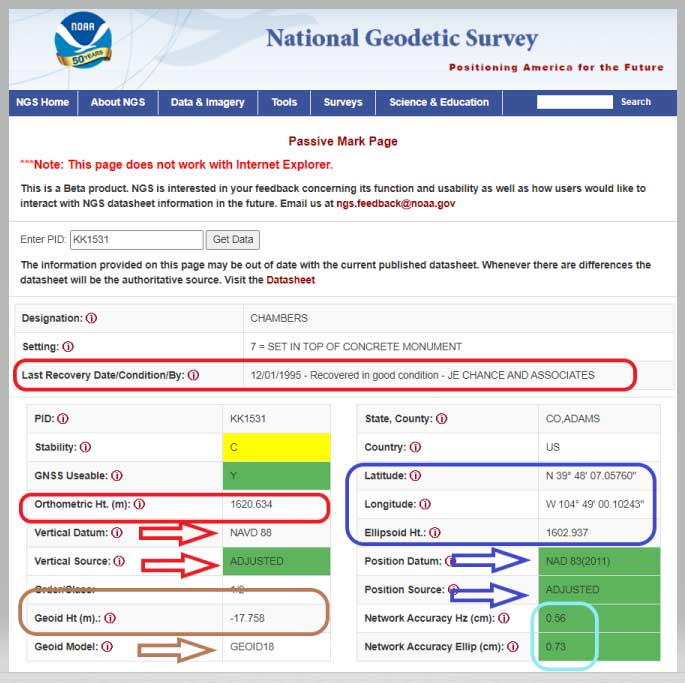

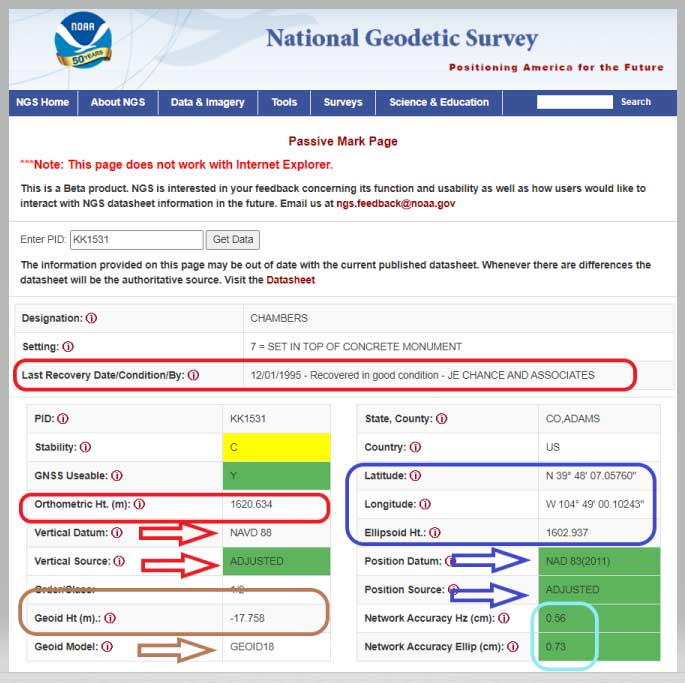

NGS releases beta tool for obtaining geodetic information

By Lurker,

NGS has developed a new beta tool for obtaining geodetic information about a passive mark in their database. This column will highlight some features (available as of Oct. 5, 2020) that may be of interest to GNSS users. It provides all of the information about a station in a more user-friendly format. The box titled “Passive Mark Lookup Tool” is an example of the webtool. The tool provides a lot of information so I have separated the output of the tool into several boxes titled “Passive Mark Loo

NASA Draining the Oceans

By Lurker,

Earth is known as the “Blue Planet” because of the vast bodies of water that cover its surface. With over 70% of our planet’s surface covered by water, the ocean basins hold an abundance of features, such as underwater plateaus, valleys, mountains and trenches. The average depth of the oceans and seas surrounding the continents is around 3,500 meters, and parts deeper than 200 meters are called "deep sea".

This visualization reveals Earth’s rich bathymetry, by featuring the ETOPO1 1-

Chrome plugin Earth imagery

By intertronic,

Hello everyone,

Here is a nice plugin for Chrome or Firefox that shows a new satellite image (from Google Earth) every time you open a new tab.

Earth View displays a beautiful landscape from Google Earth every time you open a new tab.

Chrome:

https://chrome.google.com/webstore/detail/earth-view-from-google-ea/bhloflhklmhfpedakmangadcdofhnnoh?hl=en

Firefox:

https://addons.mozilla.org/en-U

Why your mental map of the world is (probably) wrong

By iron1maiden,

These are some of the most common geographic misconceptions that are both surprising and surprisingly hard to correct.

https://www.nationalgeographic.com/culture/2018/11/all-over-the-map-mental-mapping-misconceptions/

ENVI to ArcMap

By Ghazal,

Hi all,

This is my first time using ENVI and I have applied atmospheric corrections. These are the steps I took:

1- Radiometric correction

2- FLAASH atmospheric correction

3- To eliminate the negative values and constrain the data values to between 0 and 1, I used this formula: (B1 le 0)*0+(B1 ge 10000)*1+(B1 gt 0 and B1 lt 10000)*float(B1)/10000
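For anyone who wants to check what the band math formula in step 3 does to individual pixel values, here is a minimal sketch in plain Python. It is not ENVI code; the function names and the sample values are made up for illustration, and the scale factor of 10000 is taken from the formula above. The three cases (values at or below 0, values at or above 10000, everything in between) collapse into a divide followed by a clamp to the range 0 to 1.

```python
def rescale_reflectance(value, scale=10000.0):
    """Map one scaled reflectance value to the range [0, 1].

    Mirrors the three cases of the band math formula:
    value <= 0 gives 0, value >= scale gives 1, otherwise value / scale.
    """
    return min(max(value / scale, 0.0), 1.0)


def rescale_band(band, scale=10000.0):
    """Apply rescale_reflectance to every pixel of a band given as a list of rows."""
    return [[rescale_reflectance(v, scale) for v in row] for row in band]


# Hypothetical pixel values, chosen to hit all three cases of the formula.
sample_band = [[-120, 0, 2500], [9999, 10000, 12000]]
print(rescale_band(sample_band))
```

If the band is already loaded as a NumPy array, the same operation should be expressible as `np.clip(b1 / 10000.0, 0.0, 1.0)`.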

When I export the corrected layer to TIFF, it is written as a 3-band composite, but I need single bands exported as TIFF so I
