ESRI and Microsoft

By hariasa

The other thread was originally about iPods and then turned to ESRI and Microsoft, so I moved the discussion into a new thread, if you don't mind.

A couple of years ago, Microsoft became really interested in GIS applications: Bing Maps, lots of cooperation with GIS suites, and also high-end professional software. I can't really name specific ones, but I worked at an aerial photography company, and most of the software/hardware/drivers they were working with to produce and process the pictures were Microsof

OnYourMap and Blom announce partnership

By Lurker

OnYourMap and Blom announce partnership and the release of a common high-performance mapping platform

OnYourMap and Blom now offer a comprehensive location-based platform with services and high-quality content for mapping, routing, geocoding and innovative search. This no-compromise platform enables web and mobile portals to boost their presence and revenues while positioning their valuable brand at the forefront and preserving their customers and content.

OnYourMap exclusive internet an

MapMart Cloud Rolls onto the GIS Scene

By Lurker

MapMart has long been the one-stop shop for traditional geospatial data and imagery acquisition and will remain so. The MapMart Cloud will complement MapMart by offering the same high-quality, highly accurate data for consumption via a high-speed data stream. MapMart Cloud offers hundreds of web services of data sets, including Bing Maps from OnTerra Systems, LLC.

MapMart offers a wide variety of U.S. and international geospatial data sets with additional data products coming online daily t

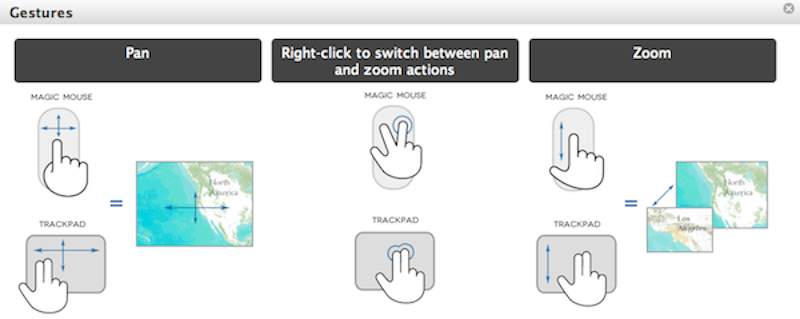

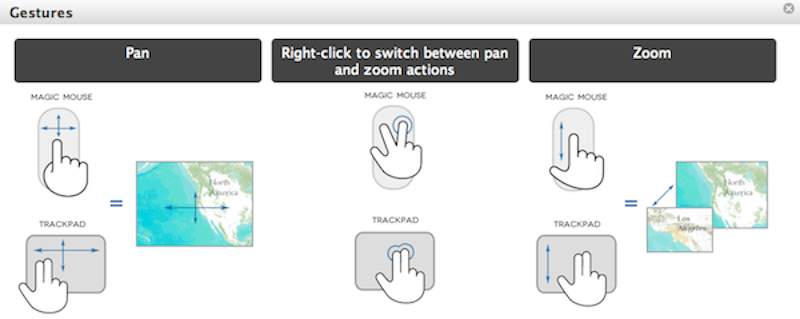

Enhanced map navigation for Mac OS X using the ArcGIS API for JavaScript

By Lurker

With the release of Mac OS X 10.6 Snow Leopard and OS X Lion, the Magic Trackpad and Magic Mouse allow you to interact with the system using touch gestures such as tap, scroll and swipe. While not all gestures are passed on as native browser events, some gestures emit traditional browser scroll events that enable intuitive and powerful map exploration.
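As a rough illustration of how scroll events from a trackpad could drive map zooming, here is a minimal sketch. It is not taken from the ArcGIS API for JavaScript: `createWheelZoom` and the 120-units-per-level threshold are hypothetical choices made for this example. The only browser feature assumed is the standard `wheel` event with its `deltaY` property. Trackpads tend to emit many small deltas, so the sketch accumulates them and reports whole zoom steps only.

```typescript
// Hypothetical helper, not part of any mapping library.
// Accumulates scroll deltas and converts them into whole zoom-level steps.
// Assumption: 120 delta units correspond to one zoom level.
function createWheelZoom(unitsPerLevel: number = 120): (deltaY: number) => number {
  let pending = 0; // scroll distance not yet converted into a zoom step

  return (deltaY: number): number => {
    // Scrolling up (negative deltaY) is treated as "zoom in" here.
    pending -= deltaY;
    // Take only whole steps, keep the remainder for the next event.
    const steps = Math.trunc(pending / unitsPerLevel);
    pending -= steps * unitsPerLevel;
    return steps; // positive = zoom in, negative = zoom out
  };
}

// Keeps a zoom level inside the range the map supports.
function clampZoom(level: number, minLevel: number, maxLevel: number): number {
  return Math.min(maxLevel, Math.max(minLevel, level));
}
```

In a browser, the returned function could be fed from a listener such as `element.addEventListener("wheel", e => { zoom = clampZoom(zoom + step(e.deltaY), 0, 19); })`, where `step` is the result of `createWheelZoom()` and the 0 to 19 range is again only an assumed example.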

you must have a Mac with OS X 10.6

Wanna study GIS? Click here!

By rahmansunbeam

Are you planning to study GIS or Remote Sensing? Do you want to become a GIS expert and make a good living? Here is a huge list of universities and programs from which you can choose what is best for you. Give it a try; maybe the next Goodchild could be you.

US on-campus masters programs

Arizona State University - Masters of Advanced Study in Geographic Information Systems (MAS-GIS)

[hide]

- http://geography.asu.edu/mas-gis

[/hide]

Ball State - Master of Science in Geography, GI Processing Emph
